Component: Data Export

Introduction

Data export component is the general component that supports the user to set up a job to export the data into a specific format (CSV for now) by setup a data collections, transform rule. After exporting the data, the user can monitor the exporting process using the data file management.

Features

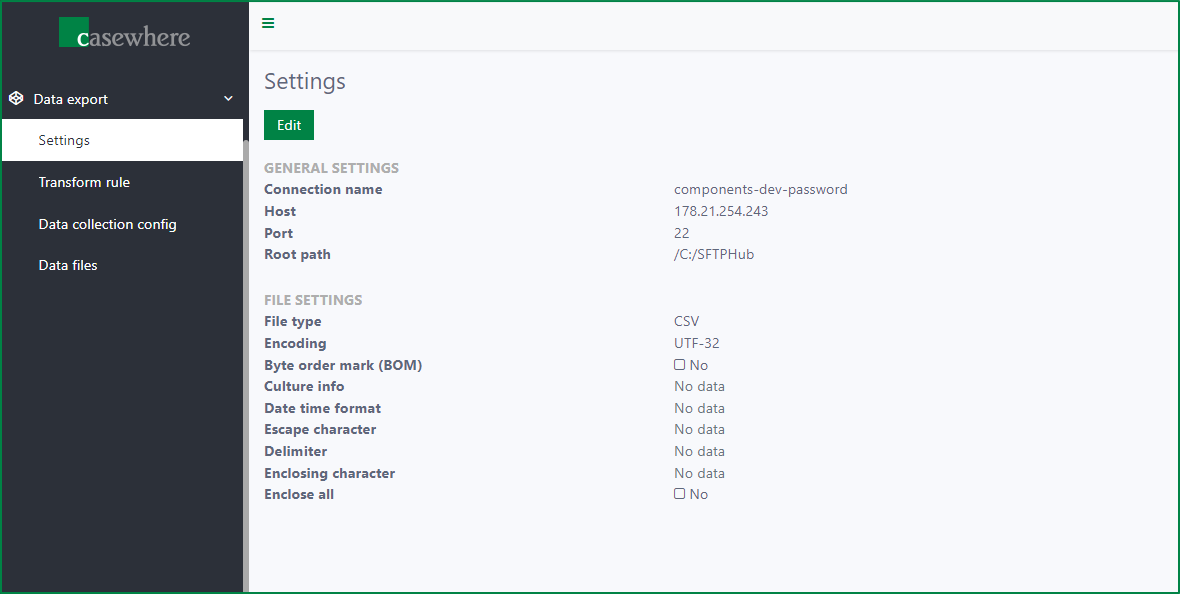

Settings

Settings page is used to manage the SFTP connection where all the data export files will be uploaded after the data export process is successfully completed, and the settings for exported files.

The page displays the saved settings in read-only state. You can edit the settings. However, creating a new setting or deleting the setting is not supported.

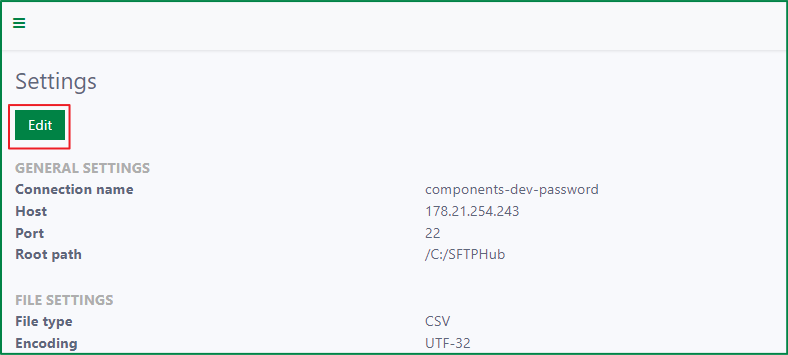

Edit setting

To edit the setting, click on Edit button

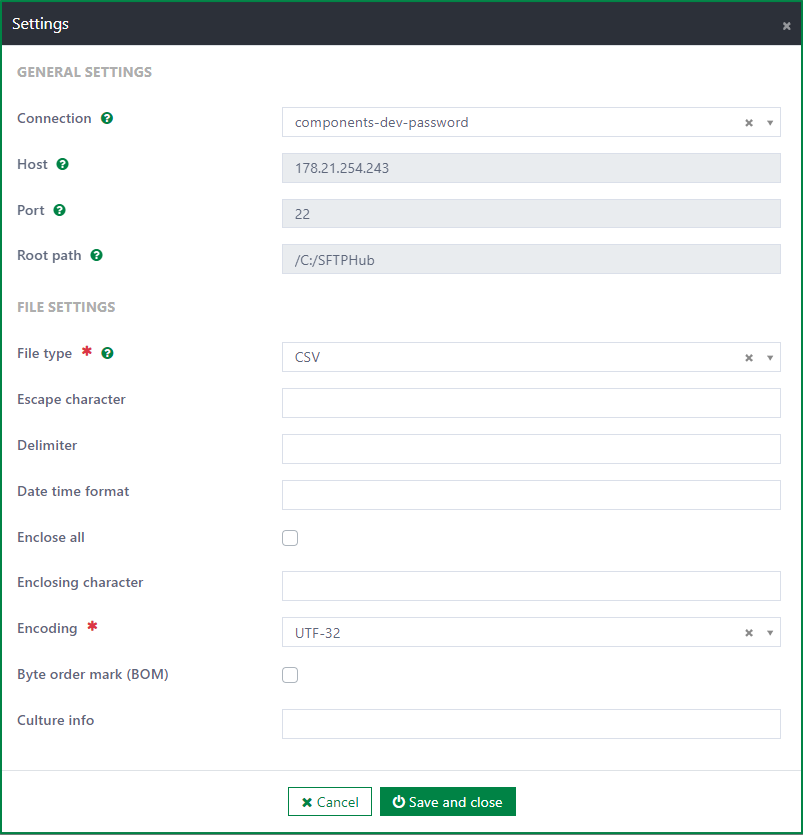

After that, the Edit Settings dialog will be opened with two sections: General settings, and File settings

General settings

The General settings section is used to configure the SFTP connection. It includes 4 fields:

- SFTP Connection: This field is required. It is a dropdown list with all SFTP connections in system. You must select 1 connection.

- Host: This field is always disabled and auto filled according to the selected SFTP connection.

- Port: This field is always disabled and auto filled according to the selected SFTP connection.

- Root path: This field is always disabled and auto filled according to the selected SFTP connection.

Whenever the SFTP connection is changed, the Host, Port, Root path field s will be also changed.

To learn more about how to setup SFTP connection, read SFTP hub.

File settings

The File settings section is used to configure the settings relating to the exported data files. If those settings are not configured, the CSV settings will be applied. If you set up those settings, the CSV settings will be overridden.

File type

- This field is required.

- It is a dropdown list with all file types, for now, only CSV is supported.

- After the file type CSV is selected, the additional fields are displayed.

Escape character

This field is optional and only displayed if file type is CSV.

The field is used to define the escape character in CSV data file. The default escape character in CSV is double quote if the field is not specified. If it is specified, the specified escape character will be used instead of the default one.

If a field value contains a character which is the same to the enclose character, then, the escape character will be added in front of the character to identify it with the enclose character.

E.g: if the Address field value is Block B ; Tower C ; 123.st, the escape character is double quote, and the enclose character is semicolon, then, in the data file, the double quote will be added in front of the semicolons within the field value, and whole value will be enclosed by 2 semicolons. And, the Address field value will be displayed in data file as ;Block B "; Tower C "; 123.st;

Delimiter

- This field is optional and only displayed if file type is CSV.

- The delimiter character is used to separate fields in CSV file.

- The delimiter character is specified as colon by default. If the delimiter character is not specified, the delimiter will be defined following the Culture info field value.

- E.g: If Culture info is da-DK, the delimiter character is semicolon . If Culture info is en-DK, the delimiter character is colon.

Date time format

- This field is optional and only displayed if file type is CSV.

- The date time format is specified as dd.MM.yyyy HH:mm. If the Date time format field is not specified, the date time format will be defined following the Culture info field value.

- E.g: If culture info is en-GB, the date time format is dd MMMM yyyy HH:mm:ss.

Enclose all

This field is optional and only displayed if file type is CSV.

By default, the checkbox is unticked. It means not all fields will be enclosed.

If the checkbox is ticked, all fields and header in the file will be enclosed.

Enclosing character

- This field is optional and only displayed if file type is CSV.

- The default enclose character is double quote if it is not specified. If it is specified, the specified character will be used instead of the default one.

- Only the field with some special characters will be enclosed. Those are:

- The field value contains delimiter character.

- The field value contains enclosing character.

- The field value contains leading space.

- The field value contains trailing space.

- The field value contains line breaks.

Encoding

- This field is required and only displayed if file type is CSV.

- The field stipulates the encoding of the output file.

- User must select one value from the list of encoding types. The list includes values:

- UTF-8

- UTF-32

- Unicode

- Big Endian Unicode

- ASCII

Byte order mark (BOM)

- This field is optional and only displayed if file type is CSV.

- The checkbox is unticked by default. When the checkbox is ticked, the data file will include BOM.

Culture info

- This field is optional and only displayed if file type is CSV.

- It is the culture information of output file. The default value is current culture information of the web server. The field value will override the plugin configuration if it is specified.

- The Culture info value stipulates the delimiter character and date time format in the file if these fields are not specified. The decimal separator also depends on the Culture info value.

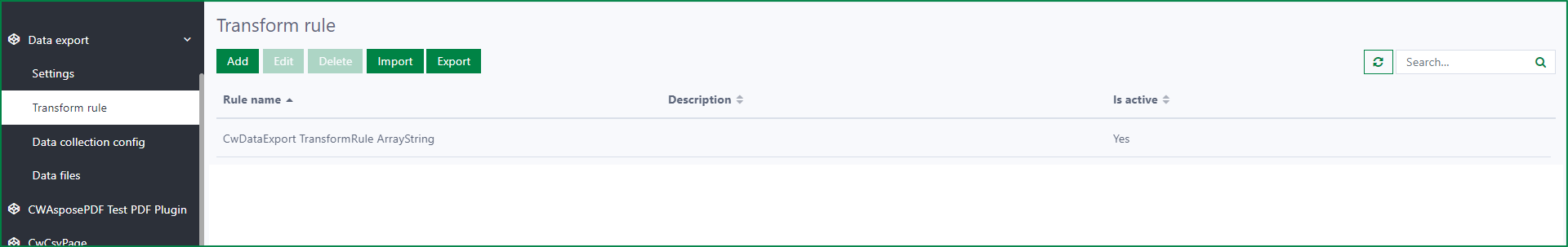

Transform rules

Transform rule is used for data transformation. Transform rules page is used to manage all the transform rules. You can add, edit, delete, import, export the transform rules.

Transform rules list

In the Transform rules list, you can view the information of:

Rule name: is the configured name of the rule. The rule name is unique.

Description: is the description of the rule. It is usually used to describe the information of the rule.

Is active: is status of the rule. If a rule is active, it can be used in the configuration of Data collection configs.

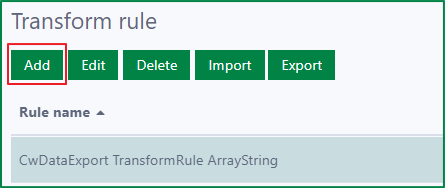

Add a new transform rule

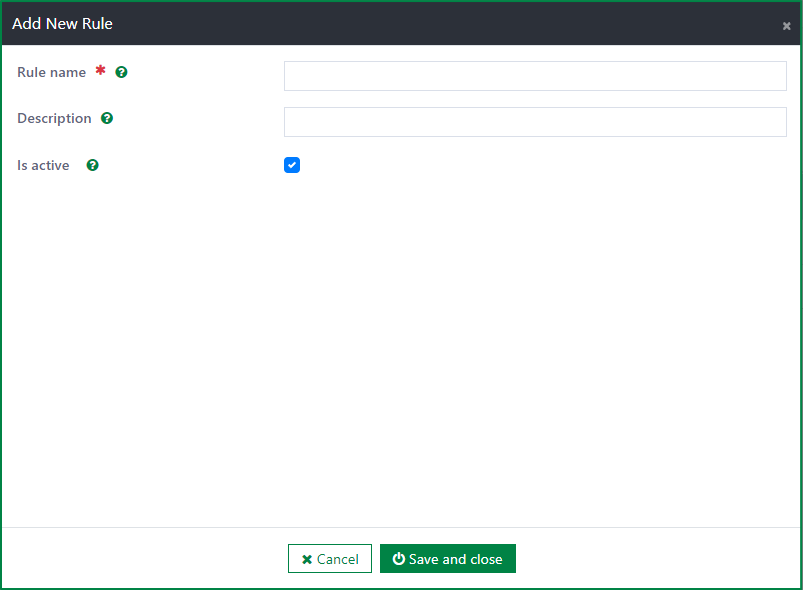

To add a new transform rule, first, click on the the Add button to open the Add new rule dialog

Then, input value for the following fields:

Rule name

This field is required.

The rule name should be the same to the relevant rule name which was added in backend.

Description

This field is optional.

This field is used to describe the information of the rule.

Is active

- If this checkbox is ticked, the rule will be active and can be used in Data collection configs.

- Else, the rule will be hidden in the configuration dialog of Data collection configs.

After filling out the fields, click on the Save and close button to add the rule.

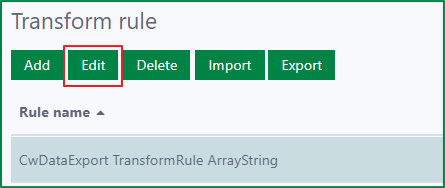

Edit a transform rule

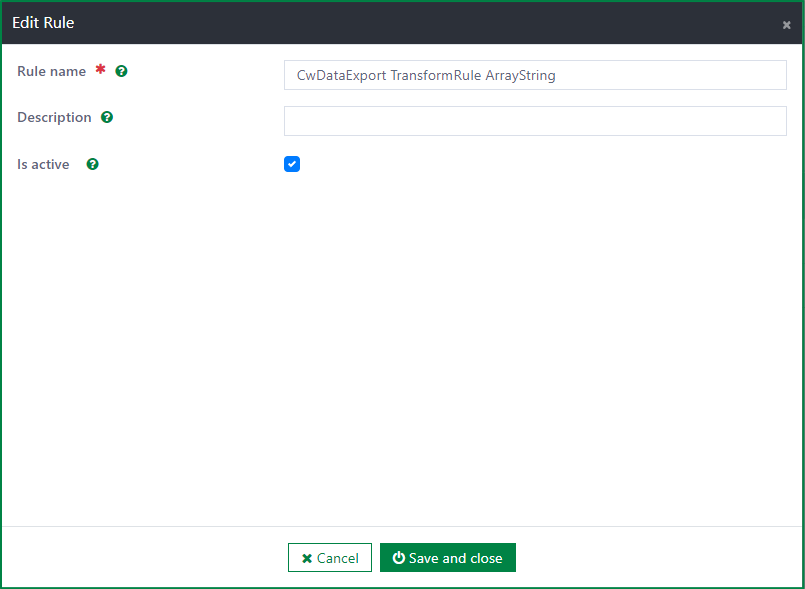

To edit a transform rule, first, select a rule, then click on the Edit button to open the Edit Rule dialog

In the Edit Rule dialog, you can update the fields:

Rule name

Description

Is active

After updating the fields, click on the Save and close button to update the rule. If you do not want to save the changes, click on the Cancel button.

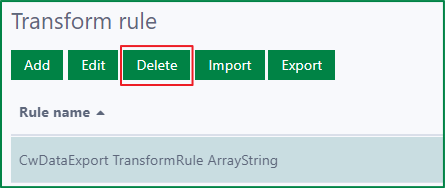

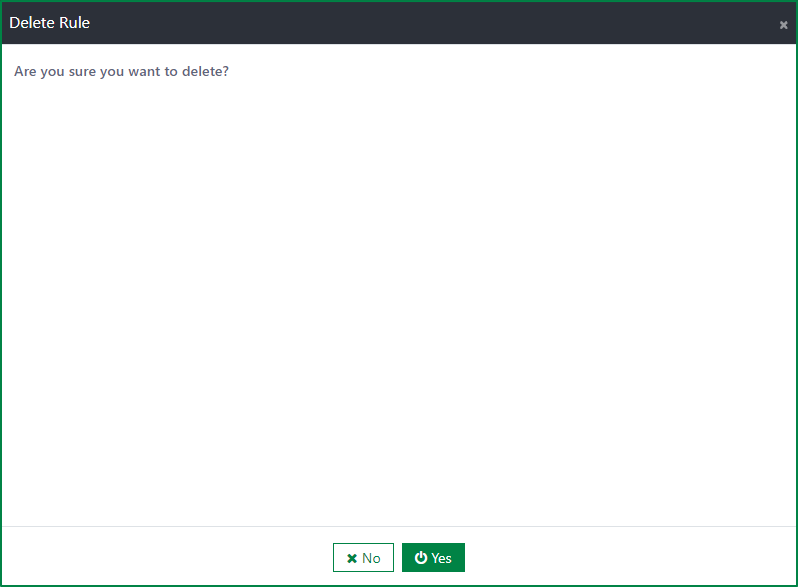

Delete a transform rule

To delete a transform rule, first, select a rule. Then, click on the Delete button

If the rule is not used for any Data collection configs, a confirmation dialog will be shown. If you select the Yes button, the rule will be deleted immediately. If you select the No button, the deletion will be cancelled.

If the rule was already used in a Data collection configs, the warning message dialog will be displayed to inform the disallowed deletion

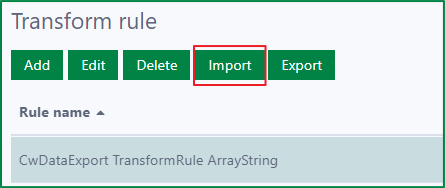

Import the transform rules

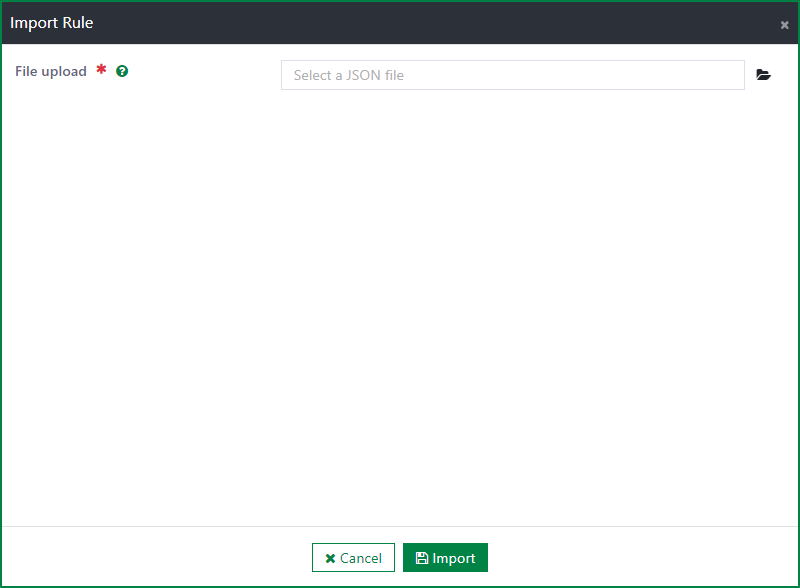

To import the transform rules, first, click on the Import button to open the Import Rule dialog

Then, click on the placeholder of the File upload field to open file selection dialog

Next, select a JSON file to import (multiple selection is not supported). After selecting the file, click on the Import button to import the rules from the JSON file. If you do not want to execute the import process, click on Cancel button.

If the imported transform rule has the same name to an existing one in the Transform rules page, the existing one will be overridden by the new one.

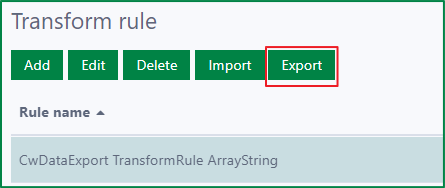

Export the transform rules

To export 1 or several rules in the list, select the rules, then click on the Export button. Then, the JSON file of the selected rules will be downloaded immediately.

To export all rules in the list, click on Export button. Then, the JSON file of all rules will be downloaded immediately.

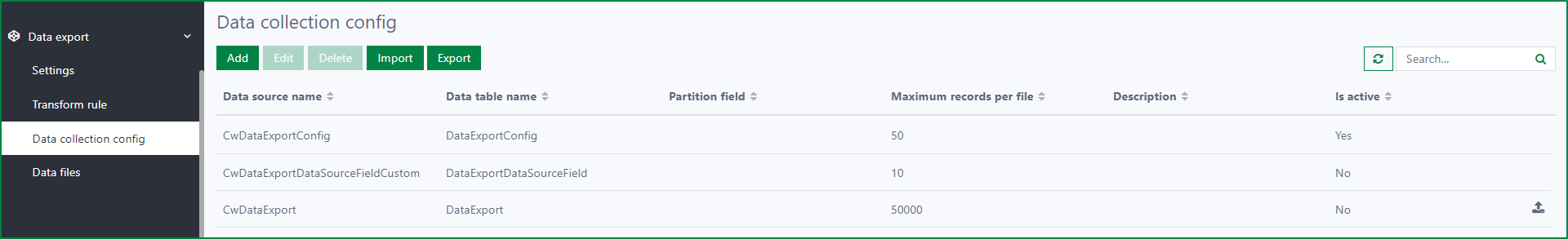

Data collection configs

Data collection config is the configuration for the data included in the exported data file, such as: data source, maximum records…

In Data collection configs page, you can view all the created configs, also add, edit, delete, import, export the configs.

Data collection configs list

In Data collection configs list, you can view all configs with the information of:

Data source name: is name of the data source selected in the config

Data table name: is the input data table name of the data source

Partition field: is the date time field which is used as condition to query data

Maximum records per file: is the number of maximum records can be included in 1 file

Description: is the description of the config. It is usually used to describe the information of the config.

Is active: is status of the config. If the config is active, the relevant data files will be sent whenever the scheduler job is run.

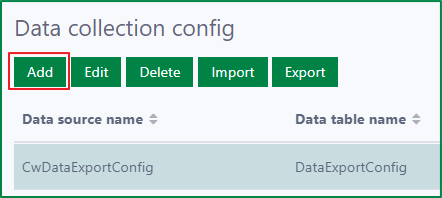

Add a new Data collection config

To add a new Data collection config, first, click on the Add button

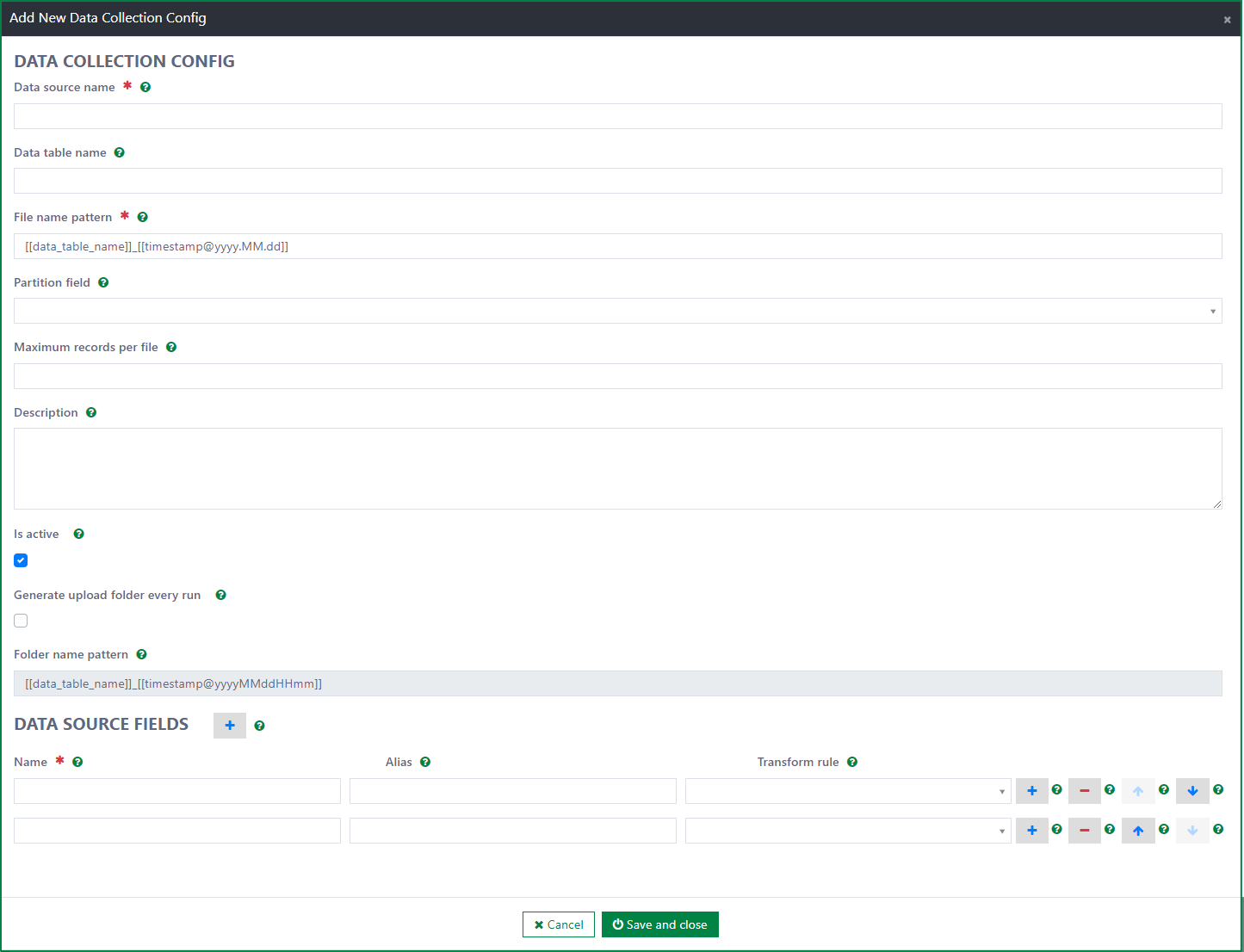

Then, in Add Data collection config dialog, fill out the fields:

Data source name

This field is required.

You must input name of the data source.

List of Data source fields and Partition fields will be generated according to the input data source name.

Data table name:

- This field is optional.

- Data table name can be used as an element in generating exported file name. If Data table name is empty, Data source name will be used in exported file name instead.

- This field must not include the following characters |*:"<>/?

File name pattern

- This field is required.

- The field is used to configure the name of the exported file.

- The default file name pattern is [[data_table_name]]_[[timestamp@yyyy.MM.dd]]

- The pattern must not contain any of the following characters \ / : * ? " < > |. These characters are disallowed characters in a file's path.

- You can add a fixed file name, or configure a dynamic file name by using the following elements in file name pattern:

- [[data_table_name]]: Use it if you want to insert the data table name into file name. If data table name is empty, the data source name will be inserted instead.

- [[timestamp@

]] : Use it if you want to insert the timestamp into file name.is used to define the specific format for the timestamp, e.g: [[timestamp@yyyy.MM.dd HH:mm]] - [[file_index]]: Use it if you want to insert the index of the file into file name. If you do not specify it, the system will add the index at the end of the file name automatically in case the data are separated to multiple files.

Partition field

- This field is optional.

- List of Partition fields includes all the date time fields belonging to the input data source name.

- Partition field is used as a date time filter to query data. If the partition field is specified, system will query data having partition field value greater than the partition field value of last record in the previous exported file for the new exported file.

- E.g: Partition field is SubmittedDate. The SubmittedDate of last record in the previous exported file is 31.05.2023 23:50. Then, in new exported file, only records having SubmittedDate from 31.05.2023 23:51 are included.

Maximum records per file

- This field is optional.

- The field defines the maximum records can be included in 1 file. If the record amount exceeds the maximum number, the data will be separated to multiple files.

Description

- This field is optional. This field is used to describe the information of the config.

Is active

- If this checkbox is ticked, the relevant data files will be sent whenever the scheduler job is run.

Generate upload folder everyday

- If the checkbox is ticked, new folder will be created everyday to store the relevant exported file.

Folder name pattern

- This field is optional if the Generate upload folder everyday checkbox is unticked.

- It will be mandatory if the Generate upload folder everyday checkbox is ticked.

- The field is used to configure the folder name that contains the exported files.

- Folder name patter must not contain the following characters \ / : * ? " < > |

- You can add a fixed folder name, or configure a dynamic folder name with the following elements:

- [[data_table_name]]: Use it if you want to insert the data table name into folder name. If data table name is empty, the data source name will be inserted instead.

- [[timestamp@

]] : Use it if you want to insert the timestamp into folder name.is used to define the specific format for the timestamp, e.g: [[timestamp@yyyy.MM.dd HH:mm]]. If it is not specified, system will insert the timestamp to folder name automatically with format yyyyMMddHHmm.

Data source fields:

- By clicking on + button, the additional fields to fill the information of the data source field will be displayed. You can add multiple data source fields. After adding the data source field, you must select the attribute field, and configure additional information for it with the following fields:

Name:

- This field is required.

- You must select 1 attribute from the list

- List of attribute fields is retrieved from the selected Data source.

Alias

- This field is optional.

- This field is used to input the alias for the selected attribute field.

- In the exported file, the Alias is used as header of the attribute field. If Alias is not specified, the attribute field name will be used instead.

Transform rule

- This field is optional.

- This field is used to define the transformation rule for the data of the attribute in the exported file.

- List of transformation rules is retrieved from the Transform rules page.

- By clicking on + button, the additional fields to fill the information of the data source field will be displayed. You can add multiple data source fields. After adding the data source field, you must select the attribute field, and configure additional information for it with the following fields:

After filling the information, click on Save and close button to complete adding the config.

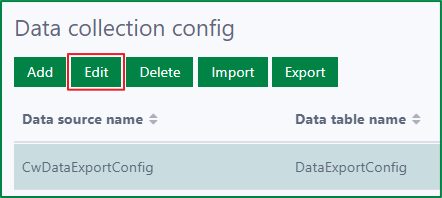

Edit a Data collection config

To edit a Data collection config, first, click on Edit button to open Edit Data collection Config dialog

In the Edit Data collection Config dialog, you can edit:

Data source name

Data table name

File name pattern

Partition field

Maximum records per file

Description

Is active

Generate upload folder everyday

Folder name pattern

Data source fields:

- Name

- Alias

- Transform rule

After updating the information, click on Save and close button to save the changes. Or you can click on Cancel button to discard the changes.

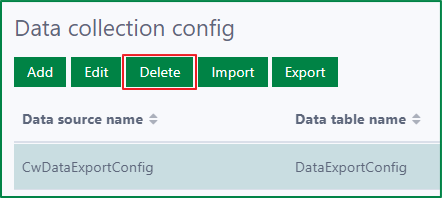

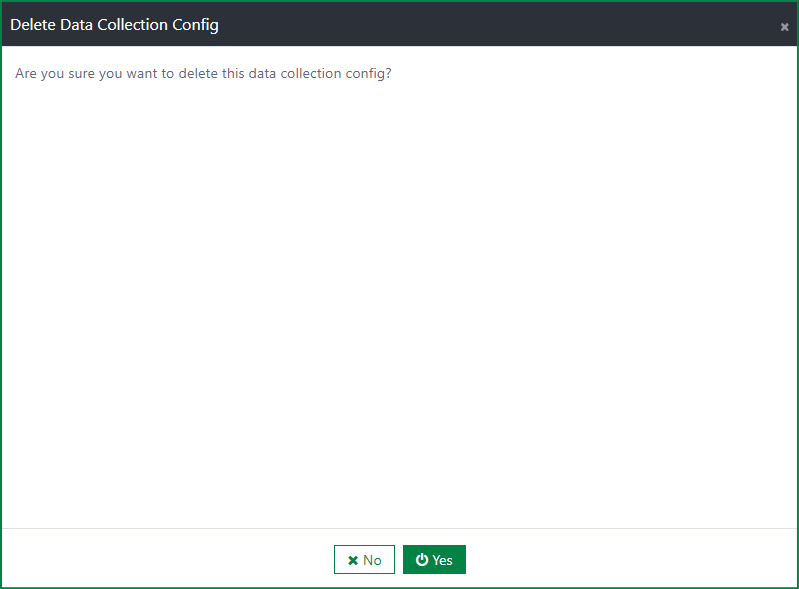

Delete a Data collection config

To delete a Data collection config, first, click on the Delete button

Then, a confirmation dialog will be displayed. If you select the Yes button, the config will be deleted immediately. If you select the No button, the deletion will be cancelled.

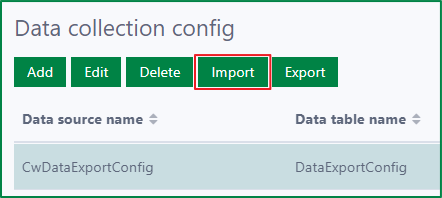

Import the Data collection configs

To import the Data collection configs, first, click on the Import button to open the Import Data collection Configs dialog

Then, click on the placeholder of the File upload field to open file selection dialog

Next, select a JSON file to import (multiple selection is not supported). After selecting the file, click on the Import button to import the rules from the JSON file. If you do not want to execute the import process, click on Cancel button.

If the imported data collection config has the same name to an existing one in the Data collection configs page, the existing one will be overridden by the new one.

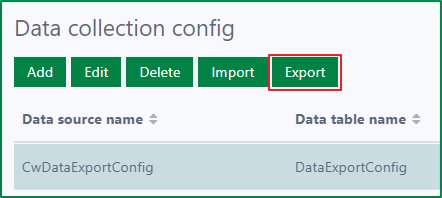

Export the Data collection configs

To export 1 or several configs in the list, select the configs, then click on the Export button. Then, the JSON file of the selected configs will be downloaded immediately.

To export all configs in the list, click on Export button. Then, the JSON file of all configs will be downloaded immediately.

Scheduler job overview

The Scheduler job will get the data based on the active Data collection configs and only get the data that has a Partition field within the range from the last successful run until the current time. The data is sorted ascending by the Partition field. If no Partition field is defined, then the system will get all the data.

In the background, the job will calculate and divide the data into multiple files based on the maximum record per files value.

After finishing the preparing data process and all of the CSV files are generated successfully, the system will trigger the uploading process and uploaded to the SFTP server which is configured in Settings after the Scheduler job is run successfully. If there are one or more files is generated fail, the job will stop working.

During uploading, if there is one more file is uploaded failed, the job will revert the uploading process by removing the successfully uploaded file to the SFTP server.

All data files and status are managed in the Data files page.

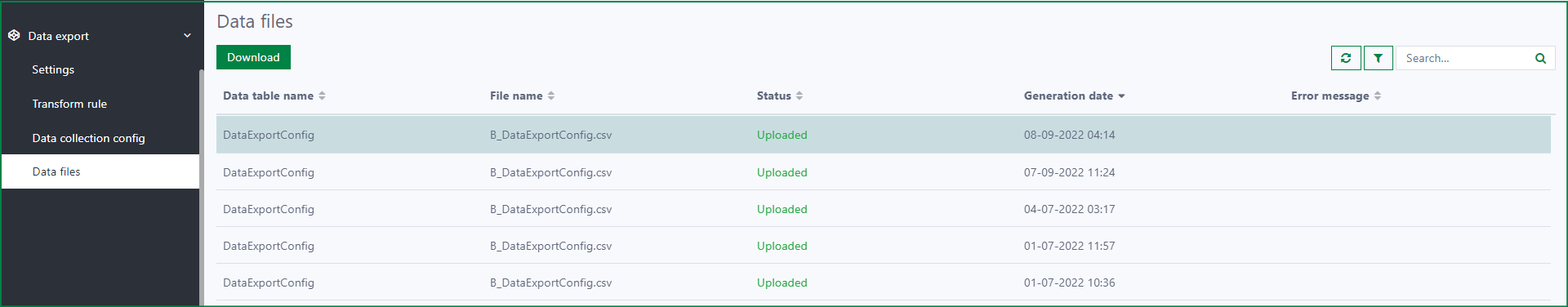

Data files management

Data files page is used to manage the data files which are uploaded to the SFTP server. In this page, you can view all the data files with the status and other information (file name, generated date, etc.), and download the data files.

Data files list

In Data files list, you can view the information of the data files, they are:

Data table name: is the configured Data table name in the relevant Data collection configs. If Data table name is not configured, Data source name will be displayed instead.

File name: is the name of the uploaded data files. If there are multiple data files generated from a Data collection config at one job running time. The data files will have the numeral suffix, for example: yyyy.MM.dd_data table name(s)_1.csv, : yyyy.MM.dd_data table name(s)_2.csv

Status: is the status of the data files. There are 7 statuses:

- New: the data file has just been created.

- Preparing: the data file is in preparation.

- Preparing failed: the preparation for the data file has failed.

- Prepared: the preparation for the data file has completed.

- Uploading: the uploading of the data file to SFTP server is in progress.

- Uploading failed: the uploading of the data file to SFTP server has failed.

- Uploaded: the data file has been uploaded to SFTP server successfully.

Generation date: is the date time when the data file preparation has started.

Error message: is the error message when the data file preparation or data file uploading processes has failed.

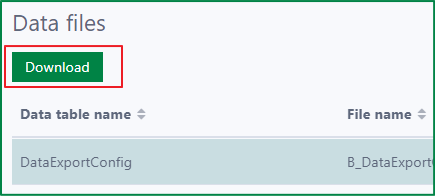

Download data files

You can only download the data files after it is prepared successfully. The download-able files has one of these status: Prepared, Uploading, Uploading failed, Uploaded. Only 1 data file can be downloaded at a time.

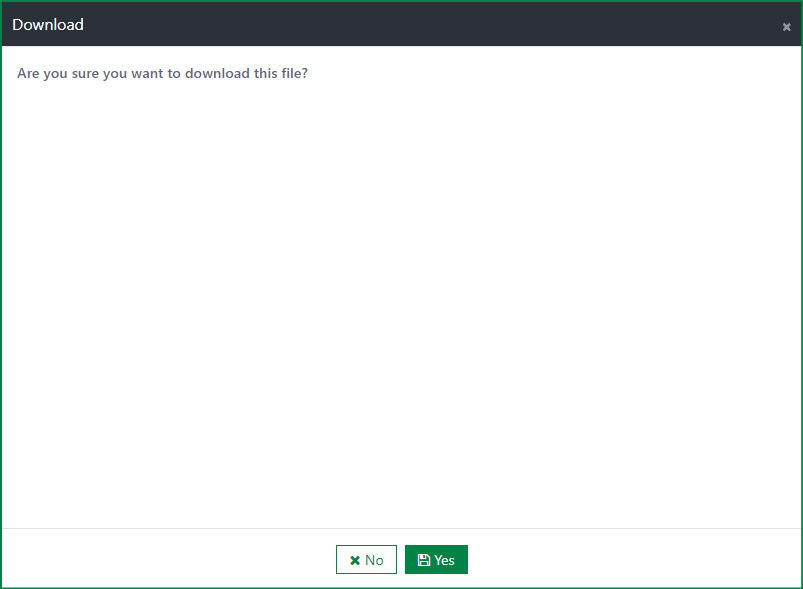

To download the data file, first, select the data file and click on the Download button

After that, a confirmation dialog will be opened. To continue downloading the file, click on the Yes button. If you want to cancel the downloading, click on the Cancel button.

Installation

Requirements

- Casewhere 2.6.0 or later.

Configuration

- Import products: Casewhere SFTP Hub, Product-Cw CSV and Casewhere Data Export

- Link the administration page to your worker sites and configure access control

- Configure the SFTP hub folder and SFTP connection

- Configure the Data Export settings

Dependencies

Releases

1.1.0 - 12/04/2022

Changelog

- Support file settings

- Support reordering data source fields

- Support showing data source fields in the dropdown

- Support showing header for empty CSV file

- More friendly UI for data file management

Download (login required): Data Export v1.1.0

1.0.0 - 24/02/2022

Changelog

- Data export settings

- Data collection configuration

- Transform rules management

- Data files management

Download (login required): Data Export v1.0.0

Roadmap

- More format

- Support different data storage: Sharepoint, Azure storage rather than SFTP